How about more insights? Check out the video on this topic.

Introduction

In the ever-evolving landscape of cloud computing, the challenges of scaling infrastructure have taken on new dimensions. While developers now have unprecedented access to powerful cloud hardware, the complexity of application processing demands has skyrocketed. This juxtaposition presents a unique set of challenges and opportunities for modern tech professionals.

In a recent webinar, Zhehui Zhou, a Developer Advocate at DragonflyDB, delved into this critical topic, offering fresh perspectives on scaling strategies in the cloud era. This blog post distills the key insights from Zhou’s presentation, exploring how we can rethink our approach to vertical and horizontal scaling to achieve greater efficiency, reliability, and performance.

As we navigate through the concepts presented, we’ll examine:

- The evolution of cloud hardware and application demands over the past decade

- Traditional approaches to vertical and horizontal scaling

- Modern strategies for optimizing cloud infrastructure

- Techniques to enhance efficiency, reliability, and performance in scaled systems

Let’s dive into the key takeaways from Zhou’s presentation and discover how we can scale smarter in the modern cloud world.

The evolution of database infrastructure: from mainframes to cloud

The ’90s: the era of monolithic systems

In the 1990s, as financial institutions began their digital transformation, the landscape of database infrastructure was dominated by what industry insiders referred to as “IOE”:

I: IBM servers

O: Oracle databases

E: EMC storage

This trifecta represented the gold standard for stability and reliability, crucial factors for banks dealing with sensitive financial data. These systems prioritized:

- High levels of transaction isolation

- Complex digital banking experiences

- Robust monthly statement generation

- Prevention of issues like double spending

The 2000s: the rise of open source and Internet companies

As we entered the new millennium, a shift occurred in the database landscape:

- Young internet companies emerged with limited funding

- Open-source software began to boom

- MySQL and PostgreSQL gained popularity as alternatives to commercial databases

Key drivers of change:

- Cost-effectiveness of open-source solutions

- Scalability needs of rapidly growing user bases

- Innovation-driven culture of Internet startups

Companies like Facebook faced unprecedented challenges:

- Dealing with millions, potentially billions, of users in a short time frame

- Single MySQL instances proved inadequate for massive loads

- Introduction of database sharding as a scaling solution

This era marked the beginning of a paradigm shift in how we approach database scalability, setting the stage for the cloud-native solutions we see today.

The paradigm shift: from sharding to cloud computing

The limitations of early scaling solutions

As internet companies proliferated in the early 2000s, they faced new challenges:

- Eventual consistency became more acceptable for social networking applications

- Database sharding emerged as a common scaling solution

- Compromises in SQL database benefits:

  - Reduced transaction capabilities

  - Weakened constraint enforcement
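To make the transaction compromise concrete, here is a minimal Python sketch of application-level sharding. The shard count, the `shard_for` helper, and the in-memory dictionaries standing in for separate database instances are all illustrative assumptions, not details from the talk.

```python
import zlib

NUM_SHARDS = 4  # hypothetical shard count

# Each dict stands in for a separate database instance.
shards = [dict() for _ in range(NUM_SHARDS)]


def shard_for(user_id: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a key to a shard with a stable hash (CRC32, chosen for simplicity)."""
    return zlib.crc32(user_id.encode("utf-8")) % num_shards


def save_balance(user_id: str, balance: int) -> None:
    """Write a user's balance to whichever shard owns that user."""
    shards[shard_for(user_id)][user_id] = balance


def transfer(src: str, dst: str, amount: int) -> bool:
    """Move funds between two users; return True if both rows share a shard.

    When the rows live on different shards, the two writes below hit two
    separate databases, so one local transaction cannot cover both of them.
    """
    src_shard, dst_shard = shard_for(src), shard_for(dst)
    shards[src_shard][src] -= amount
    shards[dst_shard][dst] += amount
    return src_shard == dst_shard
```

In a real deployment the cross-shard case would need extra machinery (for example a two-phase commit or a compensating action), which is exactly the kind of capability a single SQL instance provides for free.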

Hardware evolution and its impact

The mid-2000s saw significant changes in hardware capabilities:

- Transition from single-core to multi-core processors

- Introduction of hyper-threading technology

- Shift in how Moore’s Law gains were used: growing transistor counts went into more cores rather than faster single cores

This hardware evolution necessitated changes in software design to leverage multi-core architectures effectively.

The rise of hyperscalers and distributed systems

Companies like Google faced unprecedented scaling challenges:

- Traditional solutions inadequate for massive traffic loads

- Development of custom solutions:

  - Google File System (GFS)

  - MapReduce framework

  - BigQuery for distributed data processing

These innovations laid the groundwork for modern distributed systems and cloud computing.

The cloud computing era: a new paradigm

The AWS revolution

Amazon Web Services (AWS) introduced a range of services that transformed the infrastructure landscape:

- S3 (Simple Storage Service)

- SQS (Simple Queue Service)

- EC2 (Elastic Compute Cloud)

- SimpleDB

- EBS (Elastic Block Store)

These foundational services paved the way for more abstract, higher-level offerings in AI, serverless computing, and beyond.

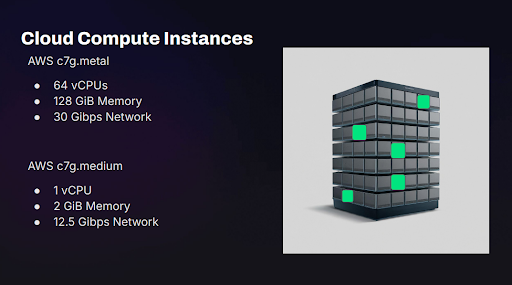

Modern cloud instances: power and flexibility

Today’s cloud instances offer unprecedented power and flexibility:

- Example: AWS C7g family

  - C7g metal: 64 vCPUs, 128 GB memory, potentially dedicated hardware

  - C7g medium: Smaller, more flexible options

This range of options allows businesses to choose the right balance of power, cost, and scalability for their specific needs.

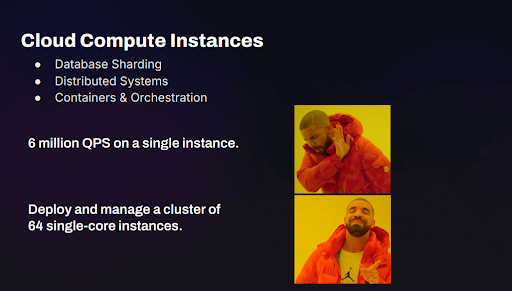

Vertical vs. horizontal scaling: rethinking our approach

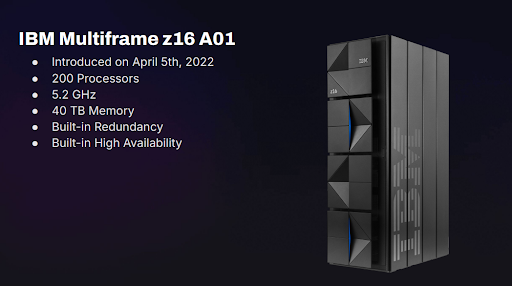

The power of modern vertical scaling

While distributed systems have become the norm, it’s crucial to reconsider the potential of vertical scaling in today’s context:

- The IBM Z Platform: A Modern Mainframe Marvel

  - 200 processors at 5.2 GHz each

  - 40 terabytes of memory

  - Built-in redundancy and high availability

  - Potential to run high-performance databases like Postgres and FerretDB

This level of vertical scaling could potentially support significant growth for 10-15 years without major performance issues.

The hidden costs of horizontal scaling

While horizontal scaling offers benefits, it’s important to consider its drawbacks:

- Resource duplication

  - Multiple instances of microservices consume duplicated resources

  - Each service requires its own memory for configuration files

- Connection management

  - Database connections (e.g., to Postgres, Redis, or Dragonfly) are not shared between services

  - Careful calculation needed to optimize connection numbers and prevent resource waste

- Inter-service communication overhead

  - Services often communicate via RPC calls over the network

  - Potential for increased latency, especially if traffic leaves and re-enters the cloud

- Complexity in resource allocation

  - Need for precise calculation of service deployments and database connections
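The connection calculation mentioned above can be sketched in a few lines of Python. The service names, replica counts, pool size, and connection limit below are made-up numbers for illustration; the only assumption about behavior is that every replica keeps its own connection pool, since connections are not shared between services.

```python
def total_connections(replicas_per_service: dict[str, int], pool_size: int) -> int:
    """Connections opened against one database if every replica keeps its own pool."""
    return sum(replicas_per_service.values()) * pool_size


def max_pool_size(replicas_per_service: dict[str, int],
                  connection_limit: int,
                  reserved: int = 0) -> int:
    """Largest per-replica pool that keeps the total within the database limit.

    `reserved` sets aside connections for admin tools, migrations, and so on.
    """
    replicas = sum(replicas_per_service.values())
    return (connection_limit - reserved) // replicas


# Hypothetical deployment: three services, 40 replicas in total.
services = {"checkout": 12, "catalog": 20, "search": 8}

demand = total_connections(services, pool_size=10)
budget = max_pool_size(services, connection_limit=200, reserved=10)
print(f"pool of 10 per replica opens {demand} connections")
print(f"a limit of 200 (10 reserved) allows a pool of {budget} per replica")
```

The point of the exercise is that the total grows with every replica added, so a pool size that looked harmless for one service can exhaust the database once the fleet scales out.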

Balancing act: when to choose horizontal scaling

Despite these challenges, horizontal scaling remains valuable in certain scenarios:

- When workloads exceed the capabilities of even the most powerful single machines

- For applications requiring geographical distribution

- To achieve specific reliability and fault tolerance goals

Best practices for scaling in the cloud

To optimize your scaling strategy:

- Assess your needs: Carefully evaluate your application’s requirements before defaulting to a distributed architecture

- Consider hybrid approaches: Combine vertical and horizontal scaling for optimal performance and cost-efficiency

- Optimize resource usage: In microservices architectures, focus on efficient resource allocation and connection sharing

- Monitor and adjust: Continuously monitor performance and adjust your scaling strategy as needs evolve

The hidden complexities of cloud scaling

As we delve deeper into cloud scaling strategies, it’s crucial to understand some of the less obvious challenges that can significantly impact performance and efficiency.

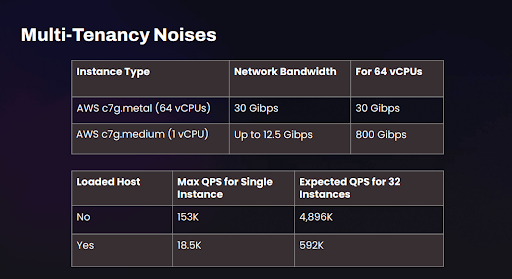

Multi-tenant noise: the noisy neighbor problem

One of the most significant issues in cloud computing is the impact of multi-tenancy on performance:

Network bandwidth allocation

- C7g metal instance (64 vCPUs): 30 Gbps

- C7g medium instance (1 vCPU): Up to 12.5 Gbps per vCPU

- 64 medium instances: Potential 800 Gbps total

This disproportionate allocation is designed to accommodate the increased network traffic in microservices architectures.
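The arithmetic behind these figures is simple enough to check directly. The short Python snippet below uses only the numbers quoted above; the variable names are ours, and the "up to" value is treated as a ceiling, so sustained throughput on the small instances may be lower.

```python
METAL_VCPUS = 64
METAL_GBPS = 30.0        # figure quoted above for the metal instance
MEDIUM_PEAK_GBPS = 12.5  # "up to" figure quoted above for one 1-vCPU instance

# Same total vCPU count, split across 64 small instances.
fleet_peak_gbps = METAL_VCPUS * MEDIUM_PEAK_GBPS
metal_per_vcpu = METAL_GBPS / METAL_VCPUS
ratio = fleet_peak_gbps / METAL_GBPS

print(f"64 medium instances, peak: {fleet_peak_gbps:.0f} Gbps")
print(f"metal instance, per vCPU:  {metal_per_vcpu:.2f} Gbps")
print(f"peak fleet bandwidth is {ratio:.1f}x the metal instance")
```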

Performance unpredictability

- Shared resources between different companies on the same physical hardware

- In-memory data stores (e.g., Redis, Dragonfly) are particularly susceptible to noisy neighbors

- Potential performance drops of up to 90% due to noisy neighbors

The amplification of performance issues in distributed systems

When scaling horizontally, the noisy neighbor problem can be amplified:

- Lower performance baseline across multiple instances

- Difficulty in achieving expected capacity multiplication

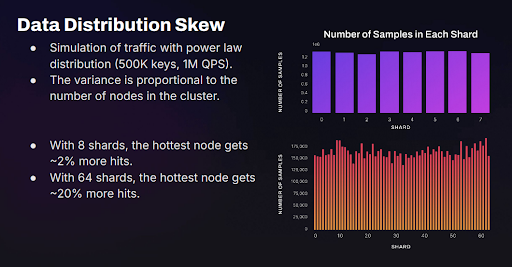

Data distribution challenges in sharded systems

As we scale horizontally, data distribution becomes increasingly complex:

Simulation results

- 8 shards: Relatively even distribution (±2% variation)

- 64 shards: Less even distribution (up to 20% variation)

This uneven distribution can lead to:

- Hot nodes receiving disproportionately more hits

- Potential performance bottlenecks

- Increased complexity in load balancing
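A simulation of this kind is easy to reproduce in spirit. The Python sketch below hashes a fixed set of synthetic keys onto 8 and then 64 shards and reports how far the busiest and quietest shards are from the average. The key format, key count, and hash function are our assumptions, so the percentages it prints will not necessarily match the figures from the talk; the trend (more shards, less even distribution for the same key set) is what it illustrates.

```python
import hashlib
from collections import Counter


def shard_counts(num_keys: int, num_shards: int) -> list[int]:
    """Hash synthetic keys onto shards and return the number of keys per shard."""
    counts = Counter()
    for i in range(num_keys):
        digest = hashlib.sha256(f"key:{i}".encode()).digest()
        counts[int.from_bytes(digest[:8], "big") % num_shards] += 1
    return [counts[s] for s in range(num_shards)]


def relative_spread(counts: list[int]) -> float:
    """(busiest shard - quietest shard) divided by the average shard size."""
    mean = sum(counts) / len(counts)
    return (max(counts) - min(counts)) / mean


for shards in (8, 64):
    counts = shard_counts(num_keys=10_000, num_shards=shards)
    print(f"{shards:>2} shards: spread = {relative_spread(counts):.1%} of the mean")
```

With a fixed number of keys, each shard holds fewer keys as the shard count grows, so random fluctuations become a larger fraction of each shard's load.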

Strategies for mitigating cloud scaling challenges

To address these issues, consider the following approaches:

1. Hybrid scaling strategies

- Combine vertical and horizontal scaling to balance performance and flexibility

- Use larger, dedicated instances for critical, performance-sensitive components

2. Advanced monitoring and analytics

- Implement robust monitoring to identify noisy neighbors and performance anomalies

- Use predictive analytics to anticipate and mitigate potential issues

3. Adaptive load balancing

- Develop intelligent load-balancing algorithms that can adapt to uneven data distribution

- Implement dynamic sharding strategies that can rebalance data in real-time

4. Performance isolation techniques

- Utilize cloud providers’ isolation features where available

- Consider dedicated instances for critical workloads

5. Optimize data distribution

- Regularly analyze and optimize your sharding strategy

- Consider using consistent hashing or other advanced distribution algorithms
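As an illustration of the last suggestion, here is a minimal consistent-hashing ring in Python. It is a generic textbook sketch, not the distribution scheme of any particular database; the class name, the node names, and the choice of 100 virtual nodes per shard are assumptions made for the example.

```python
import bisect
import hashlib


def _point(value: str) -> int:
    """Place a string on the ring using the first 8 bytes of its SHA-256 hash."""
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes  # virtual nodes per physical node, smooths the load
        self._points = []     # sorted ring positions
        self._owner = {}      # ring position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        """Insert the node's virtual points into the ring."""
        for v in range(self.vnodes):
            p = _point(f"{node}#{v}")
            self._owner[p] = node
            bisect.insort(self._points, p)

    def node_for(self, key: str) -> str:
        """Walk clockwise from the key's position to the next virtual point."""
        idx = bisect.bisect_right(self._points, _point(key)) % len(self._points)
        return self._owner[self._points[idx]]


ring = HashRing(["shard-a", "shard-b", "shard-c"])
keys = [f"user:{i}" for i in range(2000)]
before = {k: ring.node_for(k) for k in keys}

ring.add_node("shard-d")
moved = [k for k in keys if ring.node_for(k) != before[k]]
print(f"{len(moved)} of {len(keys)} keys moved after adding a shard")
```

The property worth noticing is that adding a shard only moves keys onto the new shard; keys never shuffle between the existing ones, which is what makes rebalancing cheaper than with a plain `hash(key) % N` scheme.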

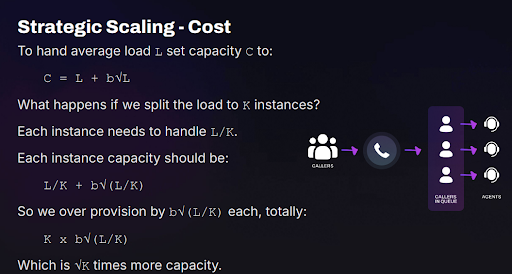

The mathematics of scaling: balancing capacity and efficiency

To truly understand the implications of scaling choices, let’s delve into some mathematical concepts that underpin capacity planning in distributed systems.

Over-provisioning in single vs. distributed systems

Consider the following scenario:

- Average load: L

- Desired capacity: C

- Over-provisioning formula: C = L + constant * √L

In a single-instance setup, the over-provisioning factor might be around 10% of the load. However, when we distribute this load across K instances, the math changes significantly:

- Each instance handles L/K load

- Capacity needed per instance: L/K + constant * √(L/K)

- Total cluster over-provisioning: K * (constant * √(L/K)) = constant * √(K * L)

Comparing this to the single-instance buffer of constant * √L, we find that a distributed system requires √K times more capacity for over-provisioning.
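For readers who want the intermediate step, the comparison can be written out as follows. Here c is shorthand for the constant in the formula, and B_1 and B_K are our labels for the over-provisioning buffer of one instance and of a K-instance cluster; neither label appears in the talk.

```latex
\begin{aligned}
B_1 &= c\sqrt{L}
  && \text{(single instance, from } C = L + c\sqrt{L}\text{)} \\
B_K &= K \cdot c\sqrt{\tfrac{L}{K}} = c\sqrt{K L}
  && \text{(} K \text{ instances, each carrying load } L/K\text{)} \\
\frac{B_K}{B_1} &= \frac{c\sqrt{K L}}{c\sqrt{L}} = \sqrt{K}
\end{aligned}
```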

Practical implications

This mathematical reality translates to real-world considerations:

- A 10% over-provisioning factor for a single instance doesn’t simply divide across multiple instances

- Each smaller instance needs its own buffer, and under the formula above that buffer is a larger share of the instance’s load than the original 10%

- This leads to a higher total over-provisioning requirement in distributed systems
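A quick numeric check makes the effect tangible. The Python sketch below plugs illustrative values into the over-provisioning formula; the load of 100 units and a constant of 1 are assumptions chosen only so that the single-instance buffer comes out at exactly 10%.

```python
import math


def buffer_single(load: float, c: float) -> float:
    """Over-provisioning for one instance: c * sqrt(L)."""
    return c * math.sqrt(load)


def buffer_cluster(load: float, c: float, k: int) -> float:
    """Total over-provisioning for k instances: k * c * sqrt(L / k)."""
    return k * c * math.sqrt(load / k)


LOAD = 100.0  # hypothetical average load, arbitrary units
C = 1.0       # hypothetical constant, picked so the single buffer is 10% of LOAD

for k in (1, 4, 16, 64):
    total = buffer_cluster(LOAD, C, k)
    print(f"K={k:>2}: total buffer = {total:5.1f} ({total / LOAD:.0%} of load)")
```

With these inputs the buffer grows from 10% of the load on one instance to 20%, 40%, and 80% at 4, 16, and 64 instances, matching the √K factor.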

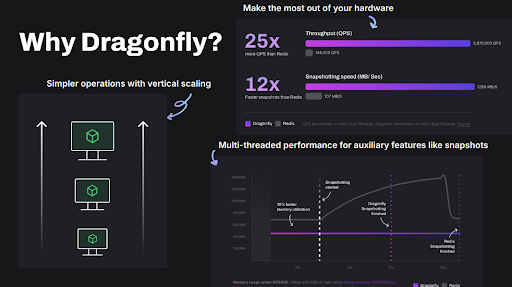

Dragonfly: pushing the boundaries of in-memory data stores

As we’ve explored the nuances of scaling strategies, it’s worth examining a specific case study that embodies many of the principles we’ve discussed. Dragonfly, a modern alternative to Redis, offers valuable insights into how vertical scaling can be maximized before resorting to horizontal distribution.

Redis: A pioneer with limitations

Redis, despite its widespread popularity and effectiveness, was designed 15 years ago and faces certain limitations:

- Core functionality remains single-threaded, limiting full utilization of multi-core hardware

- Requires clustering even on a single powerful machine to leverage multiple cores

- Uses the fork() system call for snapshotting, potentially causing significant memory spikes

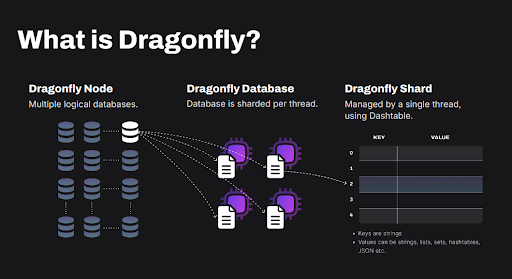

Dragonfly: reimagining in-memory data stores

Dragonfly addresses these limitations while maintaining compatibility with Redis:

- Full multi-core utilization:

  - Designed to leverage modern multi-core architectures

  - Achieves 4 to 6.43 million QPS with up to 1 terabyte of in-memory data load

- Efficient multi-threading:

  - Supports multiple logical databases

  - Within each database, operations are multi-threaded

  - Ensures atomic operations for individual keys

- Innovative data structures:

  - Utilizes the Dash Table for efficient in-memory data management

  - Gradual bucket addition instead of full rehashing

- Improved snapshotting:

  - Uses a versioning-based algorithm

  - Avoids significant memory spikes during snapshots

- Operational simplicity:

  - Single binary can fully utilize a powerful multi-core machine

  - Reduces the need for premature clustering
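The idea of growing a hash table gradually rather than rehashing everything at once can be illustrated with a small toy. The Python sketch below is a generic split-on-full table in the style of extendible hashing; it is not Dragonfly’s Dash Table, and the class names, segment capacity, and `entries_moved` counter are all assumptions made for the example. When one segment fills up, only that segment’s entries are redistributed, while the rest of the table stays untouched.

```python
class Segment:
    """A fixed-capacity group of entries; `depth` is how many hash bits it uses."""

    def __init__(self, depth: int):
        self.depth = depth
        self.items = {}


class SplitOnFullTable:
    def __init__(self, segment_capacity: int = 8):
        self.capacity = segment_capacity
        self.global_depth = 0
        self.directory = [Segment(0)]  # 2**global_depth slots pointing at segments
        self.entries_moved = 0         # total entries relocated by all splits

    def _segment(self, key) -> Segment:
        return self.directory[hash(key) & ((1 << self.global_depth) - 1)]

    def get(self, key, default=None):
        return self._segment(key).items.get(key, default)

    def put(self, key, value) -> None:
        while True:
            seg = self._segment(key)
            if key in seg.items or len(seg.items) < self.capacity:
                seg.items[key] = value
                return
            self._split(seg)  # grow by one segment, then retry

    def _split(self, seg: Segment) -> None:
        if seg.depth == self.global_depth:
            # Double the directory of pointers; no entries move in this step.
            self.directory = self.directory + self.directory
            self.global_depth += 1
        bit = 1 << seg.depth
        seg.depth += 1
        sibling = Segment(seg.depth)
        # Only the entries of the full segment are redistributed.
        for k in [k for k in seg.items if hash(k) & bit]:
            sibling.items[k] = seg.items.pop(k)
            self.entries_moved += 1
        for i, s in enumerate(self.directory):
            if s is seg and i & bit:
                self.directory[i] = sibling


table = SplitOnFullTable(segment_capacity=8)
for i in range(1000):
    table.put(i, i * i)
print(f"segments: {len({id(s) for s in table.directory})}, "
      f"entries moved by splits: {table.entries_moved}")
```

Each split touches at most one segment’s worth of entries, so the cost of growth is spread across many small steps instead of one large pause.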

The evolution of distributed systems

This case study reflects broader trends in the evolution of distributed systems:

- Early days: Manual sharding in application code

- Modern era: Robust distributed systems (GFS, Cloud Spanner, TiDB, CockroachDB)

- Future direction: Balancing vertical scaling with distributed architectures

Conclusion: embracing intelligent scaling in the modern era

As we’ve journeyed through the complexities of scaling strategies, from the mathematical intricacies to real-world case studies like Dragonfly, one thing becomes clear: the path to efficient scaling is not a one-size-fits-all solution, but rather a thoughtful, tailored approach.

In the end, the most successful scaling strategies are those that balance cutting-edge technology with practical business realities. By understanding the nuances of both vertical and horizontal scaling, and by leveraging innovative solutions that push the boundaries of single-instance performance, you can create a robust, efficient, and future-proof infrastructure.

We extend our sincere gratitude to Zhehui Zhou for sharing his profound insights and expertise on this critical topic. His deep understanding of distributed systems, coupled with his innovative work at Dragonfly, has provided us with a fresh perspective on scaling strategies. Zhehui’s willingness to challenge conventional wisdom and his commitment to pushing the boundaries of technology serve as an inspiration to all of us in the field.
