
Intro: from theory to action: mastering MongoDB on Kubernetes

Ready to move from theory to practice? In the first article, Peter Szczepaniak (Sr. Product Manager at Percona) explored the pros, cons, and considerations of Kubernetes for databases. Now join me, Diogo Recharte (Backend Software Engineer at Percona), as I demonstrate exactly how to bring those concepts to life with a MongoDB deployment on Kubernetes. We’ll dive deep into backup and recovery techniques so you can operate your MongoDB database with confidence.

Read the first article by Peter Szczepaniak

Setting the stage

For this demo, everything runs on a Google Kubernetes Engine (GKE) cluster. We’ll be using Percona Everest, a private, self-hosted database-as-a-service solution designed for Kubernetes. This gives us the flexibility and control that Peter touched on previously.

Creating your database: a few clicks

Let’s dive right in! From the Percona Everest dashboard, I can click “Create Database” and begin the process. We’ll focus on MongoDB for today. I’ll pick a namespace (the Kubernetes mechanism for grouping and isolating resources), name my database, and select the desired MongoDB version.

Now, here’s where the benefits of a self-hosted solution start to shine: storage classes. I can choose the type of storage that best fits my needs, thanks to preconfigured options aligned with my Kubernetes cluster setup.

Scaling made simple

Next, we address scalability. I can easily choose from horizontal scaling presets (the number of nodes) and vertical scaling options (CPU, memory, disk allocations). Of course, full customization is available if those presets don’t perfectly match my requirements.

Backup and recovery: built-in, not an afterthought

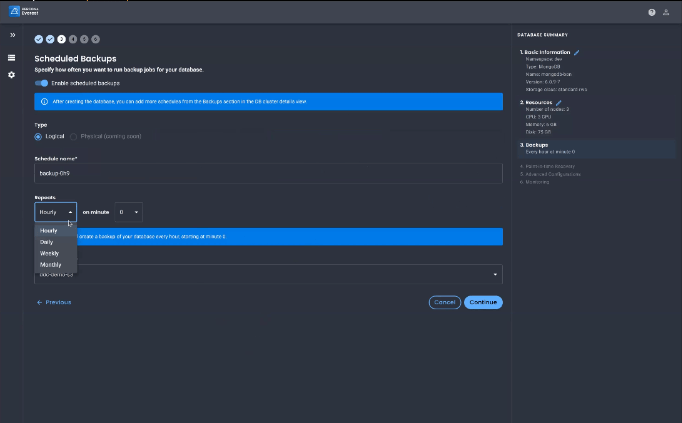

Now for a crucial aspect that sets a self-hosted solution apart: backups. With a few clicks, I can enable backups and set a schedule – let’s say a daily backup at midnight. I select my preferred storage type (here, I’m using S3) for storing those backups.

For even finer control, we have Point-in-Time Recovery (PITR). This means regular uploads of deltas (changes), allowing for more granular restoration points in case I need to go back to a specific moment in time.
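To make the relationship between scheduled full backups and PITR deltas more concrete, here is a minimal Python sketch. It is a simplified model under my own assumptions, not how Percona Everest implements restores: the function name `plan_restore` and the timeline are hypothetical, and each delta is treated as a single upload timestamp.

```python
from datetime import datetime, timedelta

# Standard five-field cron expression for "every day at 00:00".
DAILY_AT_MIDNIGHT = "0 0 * * *"


def plan_restore(full_backups, deltas, target):
    """Pick the newest full backup at or before `target`, plus deltas to replay.

    full_backups: datetimes at which full backups completed.
    deltas: datetimes at which change chunks were uploaded.
    Returns (base_backup, deltas_to_replay). Raises ValueError when no
    full backup exists at or before the target time.
    """
    candidates = [b for b in full_backups if b <= target]
    if not candidates:
        raise ValueError("no full backup exists before the requested time")
    base = max(candidates)
    # Only deltas taken after the base backup and up to the target matter.
    replay = sorted(d for d in deltas if base < d <= target)
    return base, replay


# Hypothetical timeline: nightly full backups, deltas every 10 minutes.
midnight = datetime(2024, 5, 2, 0, 0)
full_backups = [midnight - timedelta(days=1), midnight]
deltas = [midnight + timedelta(minutes=10 * i) for i in range(1, 7)]

base, replay = plan_restore(full_backups, deltas, midnight + timedelta(minutes=35))
```

The more often deltas are uploaded, the closer the restored state can get to the exact moment you ask for.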

Advanced configuration and beyond

We’re not just limited to basic settings! For those needing fine-grained control, you can directly input custom database engine parameters. Need to expose your database externally? Enable external access and limit it for security (perhaps restricting access to specific IP addresses).
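As a rough illustration of what restricting access to specific IP addresses means, the sketch below checks a client address against a list of allowed CIDR ranges using Python’s standard `ipaddress` module. The helper name `is_allowed` and the ranges (taken from the reserved documentation blocks) are hypothetical; in a real deployment the filtering is enforced by the cluster’s networking, not by application code like this.

```python
import ipaddress


def is_allowed(client_ip, allowed_ranges):
    """Return True if client_ip falls inside any of the allowed CIDR ranges."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in allowed_ranges)


# Hypothetical allowlist: one office subnet and one single host.
ALLOWED = ["203.0.113.0/24", "198.51.100.7/32"]

office_ok = is_allowed("203.0.113.42", ALLOWED)
stranger_ok = is_allowed("192.0.2.1", ALLOWED)
```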

Day-two operations: monitoring matters

Monitoring is key, and Percona Everest integrates with it directly. Simply toggle monitoring, choose your endpoint (such as Percona Monitoring and Management, PMM), and metrics will start flowing to your chosen solution.

Deployment in a snap

With our settings in place, all it takes is a click to create the database. While it initializes, you can track its status and access crucial connection details (host, port, credentials, etc.).
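Once you have the host, port, and credentials, an application typically combines them into a MongoDB connection string. Here is a small sketch of that step; the helper name `mongodb_uri` and the example values are hypothetical. The one detail worth remembering is that special characters in the username and password must be percent-encoded.

```python
from urllib.parse import quote_plus


def mongodb_uri(host, port, username, password):
    """Build a mongodb:// connection string with percent-encoded credentials."""
    user = quote_plus(username)
    secret = quote_plus(password)
    return f"mongodb://{user}:{secret}@{host}:{port}/"


# Hypothetical connection details, as they might be copied from the dashboard.
uri = mongodb_uri("db.example.internal", 27017, "app", "p@ss/word")
```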

Time for a demo! (no cooking show needed)

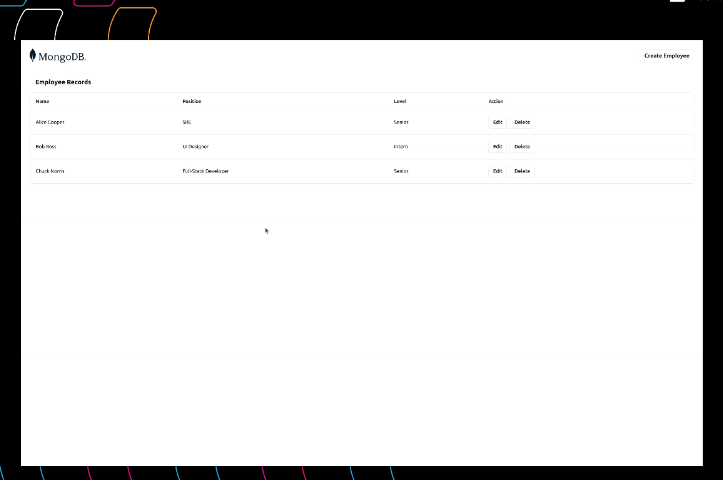

Let’s switch gears to a real-world scenario! I’ve got a pre-provisioned database ready to use. We’ll connect this to a sample MERN stack application (MongoDB, Express, React, Node.js), a simple employee management system I borrowed from MongoDB’s developer website. This lets us add, edit, and delete employee records. Think of it as a demonstration of how your database fits into the bigger picture!

Disaster recovery: when mistakes happen

Uh oh! Our intern Bob Ross might be good at abstract art, but web development isn’t his forte. In trying to delete him from our employee database, we’ve accidentally removed Chuck Norris, our star developer!

Luckily, we have backups! Through Percona Everest, I can easily see recent backups of my database. Since the last one was a few hours ago, a quick restoration should get us back on track. We could even use Point-in-Time Recovery (PITR) for more granular control, but for our demo, a full backup will do.

With just a few clicks, the restoration process begins. (Bigger databases will take longer, of course.) And…it’s done! A quick refresh of our application, and Chuck Norris is back where he belongs. Disaster averted!

The Kubernetes challenge: why databases are special

As Peter mentioned earlier, Kubernetes wasn’t initially designed for databases. Deployments (meant for stateless apps) won’t cut it. StatefulSets are better, but databases need more than just persistent storage. They need seamless failovers, backups, and monitoring, none of which StatefulSets provide.

However, StatefulSets do offer advantages: stable, predictable pod identities with ordered deployment, and an individual storage volume claim (PVC) per pod. These are key characteristics we need for our database solution.
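To show what “predictable” means here, the sketch below derives the names Kubernetes assigns to the pods of a StatefulSet and to their volume claims: pods are named `<statefulset>-<ordinal>`, and each PVC is named `<claim template>-<pod name>`. The function name and the example values are mine, chosen only for illustration.

```python
def statefulset_identities(name, replicas, claim_template="data"):
    """List the pod and PVC names a StatefulSet with this name would produce."""
    return [
        {"pod": f"{name}-{i}", "pvc": f"{claim_template}-{name}-{i}"}
        for i in range(replicas)
    ]


# Hypothetical three-member set called "mongo" with a claim template "data".
identities = statefulset_identities("mongo", 3)
```

Because the names are stable, a pod that is rescheduled comes back under the same name and reattaches to the same volume, which is exactly what a database member needs.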

StatefulSets aren’t enough!

Sure, a simple database deployment using StatefulSets might be possible, at least in theory. But databases are demanding beasts! Manually creating all the necessary Kubernetes objects (Services, ConfigMaps, Secrets, etc.) would be a nightmare, especially if we’re talking about something like a sharded MongoDB cluster.


Operators to the rescue!

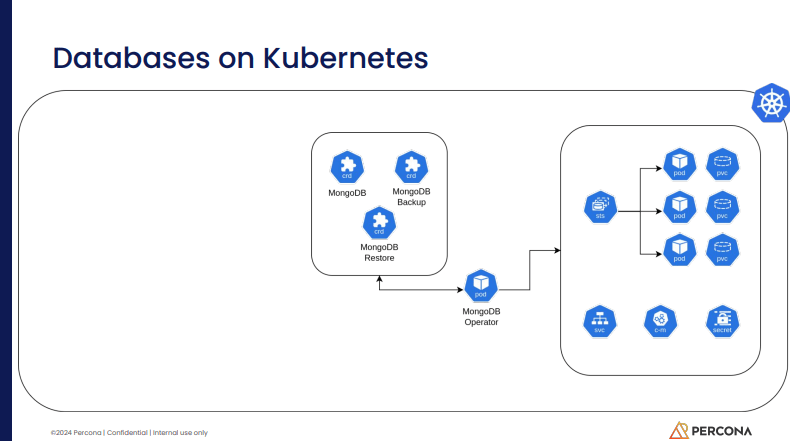

This is where the operator pattern shines. Operators are like specialized controllers running within Kubernetes, managing our database deployments with custom logic.

Instead of wrestling with individual Kubernetes objects, we describe what we want in custom resources (CRs), whose schemas come from the Custom Resource Definitions (CRDs) that the operator installs. In our MongoDB example, we might have CRDs like “MongoDB” (for the overall cluster), “backup,” and “restore.” The resources created from them contain all the specifics about how we want our database configured, deployed, and managed.
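Here is a rough sketch of what such custom resources could look like, written as Python dictionaries and serialized to JSON (which `kubectl` accepts alongside YAML). Everything specific in it is hypothetical: the API group `example.com/v1`, the kinds, and the `spec` fields mirror the illustrative names above and are not the actual schema of the Percona Operator for MongoDB.

```python
import json

API_VERSION = "example.com/v1"  # hypothetical API group, for illustration only


def mongodb_cluster(name, namespace, version, replicas):
    """A high-level description of the whole cluster."""
    return {
        "apiVersion": API_VERSION,
        "kind": "MongoDB",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"version": version, "replicas": replicas},
    }


def backup(name, namespace, cluster_name, storage):
    """A request to back up an existing cluster to a named storage."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Backup",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"clusterName": cluster_name, "storageName": storage},
    }


def restore(name, namespace, cluster_name, backup_name):
    """A request to restore a cluster from a previously taken backup."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Restore",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"clusterName": cluster_name, "backupName": backup_name},
    }


cluster = mongodb_cluster("employees", "demo", "7.0", 3)
manifest = json.dumps(cluster, indent=2)
```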

The beauty of abstraction

This approach massively simplifies things! Understanding the CRD schema provides a consistent language for creating database instances, backups, or whatever the operator is designed to handle. The operator, under the hood, translates our high-level instructions into the underlying Kubernetes objects and keeps them in line with the configuration we declared.
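The core idea behind that translation is a reconcile loop: compare the desired state with what actually exists and work out the difference. The toy sketch below shows only that comparison step. It is not code from any Percona operator, and the object names are hypothetical.

```python
def reconcile(desired, actual):
    """Return the actions needed to make `actual` match `desired`.

    Both arguments map an object name to its spec (any comparable value).
    """
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical state: the cluster should have 3 replicas and a service,
# but currently has 1 replica and a leftover config object.
desired = {"statefulset/mongo": {"replicas": 3}, "service/mongo": {"port": 27017}}
actual = {"statefulset/mongo": {"replicas": 1}, "configmap/old": {}}

actions = reconcile(desired, actual)
```

A real operator runs this kind of comparison continuously and layers database knowledge on top, for example knowing in which order members can safely be restarted.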

Beyond operators: Percona Everest simplifies even more!

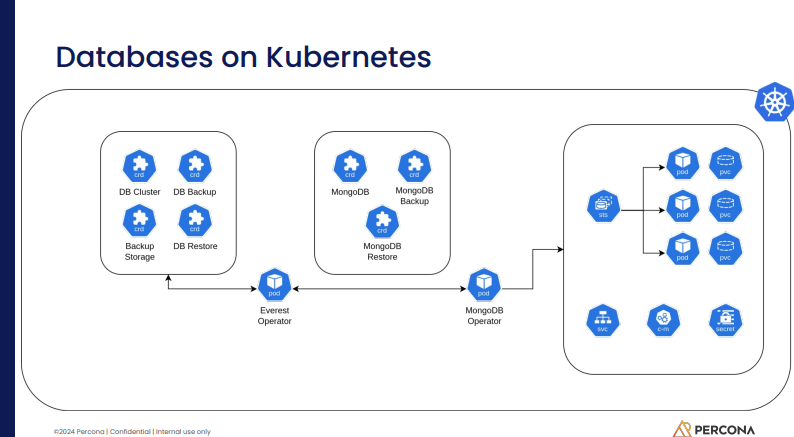

While operators are a significant improvement over manual Kubernetes object management, they still have a learning curve. At Percona, we saw a need to further simplify things for users.

Enter Percona Everest! It leverages the solid foundation of the Percona Operator for MongoDB but adds an extra layer of pre-configured options. Think of it as pre-baked expertise. This layer provides sensible defaults for common use cases, reducing the burden on you, the user.

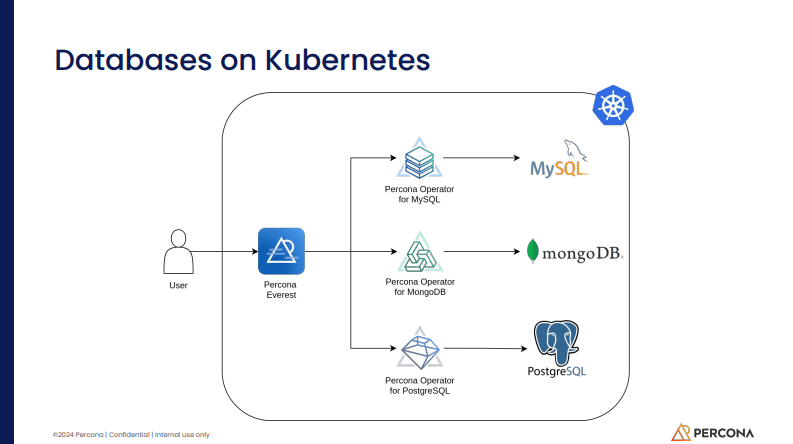

Even better, Everest supports not just MongoDB, but also PostgreSQL and MySQL! This single, easy-to-understand schema lets you deploy any of these databases with minimal effort.

Everest in action: from UI to deployment

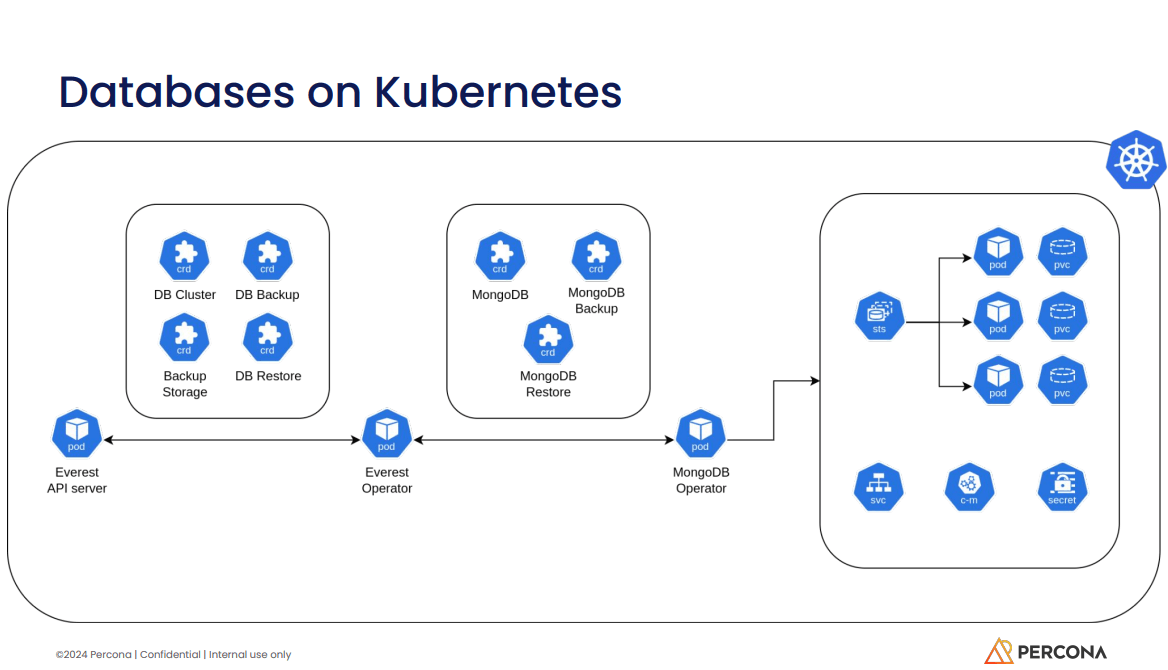

Remember the demo where we created a database through the UI? Here’s the magic behind it:

- You select your options in the wizard.

- The UI sends an HTTP request to the Everest API server.

- The API server creates a high-level “database cluster” Custom Resource (CR).

- The Everest operator translates this CR into a more detailed MongoDB CR.

- Finally, the MongoDB operator uses this CR to create the underlying Kubernetes primitives (StatefulSets, pods, Services, etc.) needed to run your database.

The process for backups and restores works similarly. You interact with the UI, the API server handles the CRs, and the operators perform the actions with the necessary database knowledge.
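The provisioning steps above can be sketched as three small functions, one per layer. This is a toy model of the flow, not Everest’s code: every function name, kind, and field is hypothetical, and the real operators create many more objects than shown here.

```python
def api_server_create(form):
    """Layer 1: the API server turns wizard input into a high-level CR."""
    return {
        "kind": "DatabaseCluster",
        "metadata": {"name": form["name"], "namespace": form["namespace"]},
        "spec": {
            "engine": form["engine"],
            "version": form["version"],
            "replicas": form["replicas"],
        },
    }


def everest_operator_translate(db_cluster):
    """Layer 2: the high-level CR becomes an engine-specific CR."""
    spec = db_cluster["spec"]
    if spec["engine"] != "mongodb":
        raise ValueError("this sketch only handles the MongoDB engine")
    return {
        "kind": "MongoDB",
        "metadata": dict(db_cluster["metadata"]),
        "spec": {"version": spec["version"], "replicas": spec["replicas"]},
    }


def mongodb_operator_expand(mongodb_cr):
    """Layer 3: the engine CR is expanded into Kubernetes primitives."""
    name = mongodb_cr["metadata"]["name"]
    replicas = mongodb_cr["spec"]["replicas"]
    objects = [{"kind": "StatefulSet", "name": name},
               {"kind": "Service", "name": name}]
    objects += [{"kind": "Pod", "name": f"{name}-{i}"} for i in range(replicas)]
    return objects


# Hypothetical wizard input.
form = {"name": "employees", "namespace": "demo", "engine": "mongodb",
        "version": "7.0", "replicas": 3}
primitives = mongodb_operator_expand(
    everest_operator_translate(api_server_create(form))
)
```

Each layer only needs to understand the one directly below it, which is why the UI can stay simple while the operators carry the database-specific knowledge.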

The big picture: simplifying database management on Kubernetes

Percona Everest acts as your central hub, communicating with the appropriate operators for each database technology (MongoDB, PostgreSQL, MySQL). It not only provisions the database engines, but also streamlines those essential day-to-day operations we mentioned earlier.

This high-level approach lets you focus on using your database, not wrestling with complex Kubernetes configurations.

Conclusion

As we’ve seen, deploying and managing databases on Kubernetes can be remarkably straightforward with the right tools and understanding. Percona Everest, building on Kubernetes operators, lets you manage MongoDB, PostgreSQL, and MySQL instances with confidence. If you haven’t already, be sure to check out Peter’s article, where he explored the strategic considerations, cloud comparisons, and the open-source landscape for databases on Kubernetes.

We extend our heartfelt gratitude to the speakers Peter Szczepaniak and Diogo Recharte for their insightful contributions to this discussion. Watch the full webinar here. Join the Document Database Community on Slack and share your comments below.
