The cloud has revolutionized how we store and access data. However, with a growing number of cloud-based tools and services, ensuring your database plays nicely with others can become a challenge. This is where interoperability and compatibility come to the rescue.

In a recent webinar, “The Importance of Interoperability and Compatibility in Database Systems,” Jonah H. Harris, Co-Founder of Nextgres, highlighted the critical role these concepts play in a cloud-first world.

This article will explore why interoperability and compatibility are essential for maximizing your cloud databases’ potential. Discover how these principles can lead to faster technology adoption, increased productivity, and significant cost savings for your organization.

From simplicity to siloed systems: A cautionary tale

Remember the good old days? Back when databases and development platforms were like peas in a pod – perfectly matched and working seamlessly together? In the era of mainframes and COBOL, everything felt simple and intuitive. Oracle Forms and Oracle Database both spoke the same language, PL/SQL, eliminating the headaches of incompatibility and complex deployment.

As technology advanced, businesses demanded more functionality and specialized applications. The one-size-fits-all approach of early database systems became ineffective. Enterprising developers saw this gap and offered a solution: brand new databases designed specifically for the emerging needs. Venture capitalists, ever opportunistic, fueled this trend with hefty investments.

However, this rapid innovation created an unforeseen consequence: a chaotic landscape of incompatible systems. These new databases couldn’t communicate with each other, effectively isolating data and making management a nightmare. Data governance became a critical concern, with the need to track data lineage across disparate systems to address security vulnerabilities. This is the cautionary tale of siloed systems – a problem that interoperability and compatibility aim to solve.

The interoperability answer: Breaking free from data silos

The story takes a turn here. While those specialized databases did excel at solving specific problems, their isolated nature led to a critical issue: data fragmentation and the challenge of data governance. This, in turn, created a form of vendor lock-in.

Forward-thinking companies and developers recognized the need for a different approach. What if these new databases could coexist and communicate with the existing systems? This interoperability and compatibility would allow them to seamlessly integrate into existing environments while ensuring applications continued to function flawlessly. The result? A surge in adoption.

Here’s the key takeaway: businesses are hesitant to invest in entirely new databases that disrupt their existing infrastructure. By prioritizing interoperability and compatibility from the outset, developers can create systems that integrate with current setups. This minimizes disruption and accelerates adoption, while existing applications, systems, and workflows continue to operate smoothly.

The cloud factor: Why integration becomes paramount

The narrative now shifts towards the cloud revolution. Organizations, in their pursuit of agility and competitiveness, are constantly seeking new technologies. These technologies, while promising, often create a new challenge: managing a growing number of diverse systems.

Managing this growing collection of systems as a single “data estate” is crucial for maximizing the benefits of cloud deployments compared to on-premise solutions. Thinking in terms of a data estate ensures a smooth transition between cloud and on-premise environments, as well as seamless integration with existing and developing applications.

Cloud adoption played a significant role in highlighting the importance of interoperability and compatibility. Initially, businesses struggled to migrate their traditional on-premise databases to the cloud in a cost-effective way, especially considering the early cloud licensing models from database vendors.

This gap opened the door for cloud providers, particularly the hyperscalers (large cloud service providers like Amazon Web Services, Microsoft Azure, and Google Cloud Platform), to step in with their own managed database services. These services aimed to replace some of the on-premise options.

Today, in this cloud-centric development era, enterprises prioritize features like HTAP (Hybrid Transaction/Analytical Processing), AI/ML (Artificial Intelligence/Machine Learning), deployment, development, and monitoring functionalities offered by cloud vendors. The specific database itself becomes less of a concern. Cloud providers offer managed services like Amazon RDS (Relational Database Service), Google Cloud SQL, and Azure’s database variants, which provide familiar database options (like MySQL or PostgreSQL) with additional cloud-specific features.

The key takeaway here is that businesses are primarily interested in leveraging the comprehensive functionality of the cloud environment, not necessarily the underlying database technology. They also seek to avoid rewriting applications during migration. This focus on functionality is what makes the integration decision – ensuring seamless communication between various systems – critical when moving to the cloud.

The cloud migration hurdle: Rewriting applications vs. integration strategies

The narrative now tackles the real challenge – migrating to the cloud without extensive application rewrites. Remember, for businesses, effort translates to cost. This is why the focus needs to shift beyond just database migration.

While moving data itself is a relatively straightforward process (transferring data from Oracle to PostgreSQL, for instance), rewriting applications to adapt to new environments is a complex and expensive undertaking. This is the often-overlooked hurdle that this article aims to address.

To navigate this challenge, we’ll delve into the different types of interoperability and compatibility. There are four key categories, each building upon the previous one:

Protocol-level compatibility

This foundational layer allows systems to communicate with each other. It’s not about the underlying network protocol (like TCP/IP), but rather the wire protocols that database clients and servers use to talk to each other. Examples include Oracle’s SQL*Net protocol, SQL Server’s TDS protocol, and the client/server protocols of PostgreSQL and MySQL. Many systems implement protocol-level compatibility, allowing applications to connect and interact as if they were using the original database. On its own, however, this level of compatibility offers only limited benefit.
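To make protocol-level compatibility concrete, here is a minimal sketch of the first message a PostgreSQL client sends after opening a connection: the StartupMessage of protocol version 3.0, as described in the PostgreSQL frontend/backend protocol documentation. A Postgres-compatible system must accept exactly these bytes, which is why off-the-shelf Postgres drivers can connect to it. The sketch omits SSL negotiation and authentication, and the function names are illustrative only.

```python
import struct

# Protocol version 3.0 is encoded as (major << 16) | minor.
PROTOCOL_VERSION_3_0 = (3 << 16) | 0


def build_startup_message(params: dict[str, str]) -> bytes:
    """Encode a StartupMessage the way a Postgres driver sends it."""
    body = struct.pack("!I", PROTOCOL_VERSION_3_0)
    for name, value in params.items():
        # Each parameter is a NUL-terminated name followed by a NUL-terminated value.
        body += name.encode("utf-8") + b"\x00" + value.encode("utf-8") + b"\x00"
    body += b"\x00"  # a final NUL terminates the parameter list
    # The leading Int32 length counts itself plus the body.
    return struct.pack("!I", len(body) + 4) + body


def parse_startup_message(data: bytes) -> dict[str, str]:
    """What a protocol-compatible server has to do with those bytes."""
    (length,) = struct.unpack("!I", data[:4])
    if length != len(data) or not data.endswith(b"\x00"):
        raise ValueError("malformed StartupMessage")
    (version,) = struct.unpack("!I", data[4:8])
    if version != PROTOCOL_VERSION_3_0:
        raise ValueError(f"unsupported protocol {version >> 16}.{version & 0xFFFF}")
    fields = data[8:-1].split(b"\x00")[:-1]  # drop the empty item after the last NUL
    if len(fields) % 2:
        raise ValueError("parameter list has a name without a value")
    return {
        fields[i].decode("utf-8"): fields[i + 1].decode("utf-8")
        for i in range(0, len(fields), 2)
    }


msg = build_startup_message({"user": "alice", "database": "app"})
print(parse_startup_message(msg))  # {'user': 'alice', 'database': 'app'}
```

Getting these bytes right is all a new system needs for a standard driver to connect. It says nothing about whether the SQL sent afterwards will be understood, which is the limitation discussed next.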

Limitations of protocol compatibility: Why it’s just the first step

While protocol compatibility establishes a connection between systems, it offers a limited level of integration. Let’s explore why this is the case:

- Basic network communication: Protocol compatibility allows your application to connect to another system and perceive it as the original system from a network perspective. This facilitates a low level of integration with minimal changes, typically limited to updating drivers.

- Limited functionality: The true value of interoperability lies beyond basic connection. Protocol compatibility, on its own, doesn’t guarantee that your application can interact with the new system in a meaningful way.

- Incompatible functionality: Consider connecting to a system using the MySQL protocol. Many systems offer MySQL protocol support, but if you can’t execute the same queries you would on a true MySQL database, the functionality remains limited. Similarly, if the same SQL query runs but behaves differently on the new system, your application can’t treat it as a genuine MySQL database.

Why does protocol compatibility exist despite limitations?

A common question arises: why would companies implement protocol-level compatibility if applications wouldn’t fully function? The answer lies in resource constraints:

- Resource-constrained development: Building a new database, especially from scratch, is a significant investment. Software companies often grapple with limited resources and funding. Engineering all the supporting tools and drivers adds another layer of expense.

- Leveraging open-source resources: Take Postgres, for instance. It offers well-developed drivers for various programming languages and tools (JDBC, ODBC, etc.). By implementing the Postgres protocol, a company developing a new system can leverage these existing drivers, significantly reducing development costs. Even if their database’s SQL dialect or behavior differs slightly, they benefit from the free open-source engineering efforts poured into these drivers. In essence, protocol compatibility provides a foundational layer of communication.

Interface compatibility

We’ve established that protocol compatibility allows systems to connect and communicate. But for a truly seamless experience, we need to go deeper. This is where interface compatibility comes into play.

Interface compatibility focuses on ensuring that systems speak the same language – not just at the basic protocol level, but also in terms of how they interact with data. The specific details of interface compatibility vary depending on the database type.

Understanding SQL dialects and extensions

For SQL databases, interface compatibility revolves around the SQL dialect. While most databases adhere to a standard SQL foundation, they often offer functionalities unique to their platform, typically implemented as extensions to the standard.

For applications to fully utilize another system, these vendor-specific extensions need to be supported at the interface level. This ensures that the application can leverage the full capabilities of the new system without requiring code modifications.
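As a rough illustration of supporting vendor extensions at the interface level, the sketch below uses Python’s built-in sqlite3 module as a stand-in target engine. It teaches the engine two Oracle-isms, the NVL function and the one-row DUAL table, so that an Oracle-flavored query runs unmodified. Real compatibility layers do this inside the database’s parser and catalog. SQLite and the helper name are assumptions made only to keep the example runnable.

```python
import sqlite3


def oracle_flavored_connection() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # Oracle's NVL(expr, default): return default when expr is NULL.
    conn.create_function("NVL", 2, lambda value, default: default if value is None else value)
    # Oracle's DUAL: a one-row table used for SELECTs that need no real table.
    conn.execute("CREATE VIEW dual AS SELECT 'X' AS dummy")
    return conn


conn = oracle_flavored_connection()
# The query uses Oracle syntax, yet the application did not have to change it.
row = conn.execute("SELECT NVL(NULL, 'fallback') FROM DUAL").fetchone()
print(row[0])  # fallback
```

This only covers the interface. NVL here follows standard NULL semantics, which is exactly where the behavioral differences come into play.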

Compatibility beyond SQL: NoSQL and RESTful interfaces

For NoSQL databases, interface compatibility means supporting the commands specific to that system, such as those used by Redis or Memcached. Similarly, in a RESTful environment, interface compatibility focuses on replicating the endpoints exposed by a system like Elasticsearch. By mirroring these endpoints and data models, a compatible system lets applications interact with it seamlessly, minimizing the need for code changes.
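A minimal sketch of what supporting system-specific commands means for a key-value store: a toy in-memory store that accepts Redis-style command arrays and mirrors the reply semantics of three Redis commands. SET replies OK, GET replies nil for a missing key, and DEL replies with the number of keys it removed. The class name is hypothetical. A real Redis-compatible server would also speak Redis’s RESP wire protocol and support command options and many more commands.

```python
class RedisLikeStore:
    """Toy store that mimics the reply semantics of a few Redis commands."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def execute(self, *args: str):
        command, *rest = args
        handler = getattr(self, f"_cmd_{command.lower()}", None)
        if handler is None:
            # A real server answers unknown commands with an error reply;
            # the message text here is simplified.
            return f"ERR unknown command '{command}'"
        return handler(*rest)

    def _cmd_set(self, key: str, value: str) -> str:
        self._data[key] = value
        return "OK"

    def _cmd_get(self, key: str):
        return self._data.get(key)  # None plays the role of Redis's nil reply

    def _cmd_del(self, *keys: str) -> int:
        removed = 0
        for key in keys:
            if key in self._data:
                del self._data[key]
                removed += 1
        return removed


store = RedisLikeStore()
store.execute("SET", "greeting", "hello")    # 'OK'
store.execute("GET", "greeting")             # 'hello'
store.execute("DEL", "greeting", "missing")  # 1
store.execute("GET", "greeting")             # None
```

An application that only sends these commands cannot tell the difference. Every command it sends that the clone does not implement is a gap in interface compatibility.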

The benefits of transparent interaction

Interface compatibility, including interfaces such as GraphQL, plays a critical role in minimizing application rewrites. Applications can continue using their existing SQL code, database commands, or SDKs. This translates to a transparent user experience, where applications appear to function identically regardless of the underlying database system.

However, interface compatibility isn’t the final piece of the interoperability puzzle. While it facilitates seamless interaction and a positive user experience, another layer of compatibility is needed to fully support applications – behavioral compatibility.

Beyond interfaces: Achieving behavioral compatibility for seamless migrations

While interface compatibility minimizes application rewrites, it’s not the final hurdle. For applications to function flawlessly after a migration, we need to ensure behavioral compatibility.

What is behavioral compatibility?

Behavioral compatibility goes beyond simply issuing the same SQL command and receiving a response. It focuses on ensuring that the response itself – the results returned by the database – matches what the application expects.

A common example is null handling in Oracle, which treats an empty string as NULL, whereas Postgres does not. Several projects have attempted to make Postgres fully compatible with Oracle at the behavioral level, because true compatibility requires mimicking Oracle’s specific behavior so that existing applications keep working. Simply executing the same SQL code in Postgres can yield significantly different results than on Oracle, which is why behavioral compatibility matters.
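The null-handling difference fits in a few lines. Oracle treats a zero-length string as NULL, so `NVL('', 'n/a')` returns `'n/a'` on Oracle. A system that only copies the interface returns the empty string. The sketch below uses SQLite as the stand-in engine and registers two NVL variants. The function names are hypothetical, and only the empty-string rule is modeled, not Oracle’s other NULL behaviors.

```python
import sqlite3


def nvl_standard(value, default):
    # Interface-level emulation: only a real NULL triggers the default.
    return default if value is None else value


def nvl_oracle(value, default):
    # Behavioral emulation: Oracle treats '' as NULL, so '' triggers the default too.
    return default if value is None or value == "" else value


def run_same_query(nvl_impl):
    conn = sqlite3.connect(":memory:")
    conn.create_function("NVL", 2, nvl_impl)
    result = conn.execute("SELECT NVL('', 'n/a')").fetchone()[0]
    conn.close()
    return result


print(repr(run_same_query(nvl_standard)))  # ''
print(repr(run_same_query(nvl_oracle)))    # 'n/a'
```

The SQL and the interface are the same, but the answers differ. Closing that gap is the job of behavioral compatibility.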

Behavioral compatibility: The key to streamlined migrations

Behavioral compatibility becomes paramount when considering database migrations, whether transitioning from Oracle to Postgres, SQL Server to Postgres, or even migrating internally developed applications.

The biggest challenge most companies face during migrations is testing. While advancements in AI offer promising solutions for automated test case generation, the real dilemma lies in applications written decades ago.

In large enterprises, these legacy applications were often developed 10 to 30 years in the past, and the original developers might no longer be with the company. The very functionality of these applications can become a mystery, making it difficult to predict how they’ll behave in a new database environment.

This uncertainty is a major deterrent to database migrations. Companies hesitate to move to a different database if they’re unsure whether these critical, yet poorly understood, applications will function as expected. The prospect of modifying application code complicates the process further, potentially invalidating existing tests and the confidence built up over years of production use.

Here’s where protocol and interface compatibility come to the rescue. They offer a solution:

- Minimize rewrites: By leveraging protocol and interface compatibility, companies can avoid extensive application rewrites. Applications can be pointed towards the new system while continuing to operate as before.

- Reduce migration risks: This approach significantly reduces the risk associated with database migrations. Applications can be tested in the new environment without the need for code modifications, allowing for a smoother and more confident transition.

The pitfalls of incomplete compatibility: Why behavioral compatibility matters

We’ve established that minimizing rewrites through protocol and interface compatibility offers significant benefits. However, a critical question remains: how do we ensure the application actually behaves as expected in the new environment?

Beyond functionality, ensuring correct behavior

Consider the Oracle and Postgres examples. Even if an application continues to function after a migration, it doesn’t guarantee it’s functioning correctly. While various tools can help create or augment test cases, achieving true behavioral consistency is essential.

Without behavioral compatibility, application code modifications might still be necessary. This highlights the importance of behavioral compatibility as it allows applications to behave as anticipated in the new database environment.
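One common way to check behavior, not just functionality, is differential testing. You run the same queries against the original system and the candidate, then compare the results row by row. The sketch below assumes both sides expose Python DB-API connections. To stay self-contained, it uses two in-memory SQLite databases with a collation of the same name but different case sensitivity, standing in for a real behavioral gap such as a case-insensitive versus case-sensitive default collation. The table, data, and collation name are hypothetical.

```python
import sqlite3


def fetch(conn, sql):
    # Go through a cursor so any DB-API connection works, not just sqlite3.
    cur = conn.cursor()
    try:
        cur.execute(sql)
        return cur.fetchall()
    finally:
        cur.close()


def diff_queries(reference, candidate, queries):
    """Return (query, expected, actual) for every query whose results differ."""
    mismatches = []
    for sql in queries:
        expected, actual = fetch(reference, sql), fetch(candidate, sql)
        if expected != actual:
            mismatches.append((sql, expected, actual))
    return mismatches


def make_db(case_insensitive: bool) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")

    def compare(a: str, b: str) -> int:
        if case_insensitive:
            a, b = a.lower(), b.lower()
        return (a > b) - (a < b)

    # Same collation name on both sides, different behavior.
    conn.create_collation("app_order", compare)
    conn.execute("CREATE TABLE fruit (name TEXT)")
    conn.executemany("INSERT INTO fruit VALUES (?)", [("apple",), ("Banana",), ("cherry",)])
    return conn


reference = make_db(case_insensitive=True)   # stands in for the legacy system
candidate = make_db(case_insensitive=False)  # stands in for the migration target
queries = [
    "SELECT COUNT(*) FROM fruit",
    "SELECT name FROM fruit ORDER BY name COLLATE app_order",
]
for sql, expected, actual in diff_queries(reference, candidate, queries):
    print(f"{sql}\n  expected {expected}\n  got      {actual}")
```

Both databases "work", and the count query agrees. Only the ordering query exposes the difference, which is the kind of issue that slips through when testing stops at "it still runs". In real use, queries should have a deterministic ORDER BY so that differences in row order are meaningful.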

A real-world example of incompatible silos

This concept becomes even clearer with a real-world example:

- Fragmented user profile systems: Imagine a company’s user profile system, a critical application component built years ago. As technology evolves and new application needs arise, new development teams emerge. These new teams, unaware of interoperability best practices, create entirely new user profile services that are incompatible with the existing system.

- The downside of siloed development: This siloed approach results in multiple versions of the same service, each storing data differently and functioning independently. Some versions might be outdated, while others receive occasional bug fixes. The consequence? A collection of “half-baked” systems that create unnecessary complexity.

The solution: Backward compatibility by design

This situation could have been easily avoided if developers had prioritized backward compatibility from the outset. By ensuring the new user profile service integrates seamlessly with the existing system, the company could have consolidated its user data and streamlined its application landscape.
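A minimal sketch of backward compatibility by design, using hypothetical field names. The new profile service stores a richer record but keeps accepting and returning the old request shape. Existing callers keep working, while new callers use the new fields. Nothing here comes from a specific system. It only illustrates one service with two views, instead of two diverging services.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    # New canonical record (hypothetical fields).
    user_id: int
    given_name: str
    family_name: str
    email: str


class ProfileService:
    def __init__(self) -> None:
        self._profiles: dict[int, Profile] = {}

    def save(self, profile: Profile) -> None:
        self._profiles[profile.user_id] = profile

    def get_v2(self, user_id: int) -> dict:
        p = self._profiles[user_id]
        return {"id": p.user_id, "given_name": p.given_name,
                "family_name": p.family_name, "email": p.email}

    def get_v1(self, user_id: int) -> dict:
        # Legacy shape: older clients expect a single "name" field.
        p = self._profiles[user_id]
        return {"id": p.user_id, "name": f"{p.given_name} {p.family_name}", "email": p.email}

    def save_v1(self, payload: dict) -> None:
        # Accept legacy writes too; splitting on the first space is a simplifying assumption.
        given, _, family = payload["name"].partition(" ")
        self.save(Profile(payload["id"], given, family, payload["email"]))


svc = ProfileService()
svc.save_v1({"id": 1, "name": "Ada Lovelace", "email": "ada@example.com"})
print(svc.get_v2(1)["family_name"])  # Lovelace
```

Both old and new clients read and write the same underlying data, so there is nothing to fragment or reconcile later.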

The importance of standards and communication

The solution lies in establishing clear standards and fostering better communication across development teams. This collaborative approach minimizes the risk of creating incompatible systems and ensures a more efficient and unified data infrastructure.

The holy grail: API compatibility for seamless integration

We’ve explored the different levels of compatibility – protocol, interface, and behavioral. Now, we have reached the pinnacle – API compatibility.

API compatibility: The unifying force

API compatibility acts as the unifying force, bringing all the previous forms of compatibility (protocol, interface, and behavior) into perfect alignment. It represents a holistic approach to compatibility, often referred to as the “unified approach.”

This concept is gaining significant traction, with industry analysts like Gartner and even the hyperscalers actively discussing its importance.

API compatibility: The dream realized

API compatibility is essentially the holy grail of interoperability. Imagine this scenario:

- You take your existing application.

- You point it towards a new database system.

- The application simply works flawlessly.

No code changes are required. Everything operates seamlessly, or more accurately, transparently, from the application’s perspective. The transition to the new system is invisible to the application itself.
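In practice, pointing the application at a new system is often just a configuration change. The sketch below takes a hypothetical PostgreSQL connection URI and rewrites only its host and port. Credentials, database name, and options stay intact. If the target is fully API-compatible, that change is the whole migration from the application’s point of view. The sketch uses only Python’s urllib.parse and does not handle IPv6 host literals.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def repoint_dsn(dsn: str, new_host: str, new_port: Optional[int] = None) -> str:
    parts = urlsplit(dsn)
    # Keep any "user:password@" prefix exactly as written.
    userinfo, at, _old_hostport = parts.netloc.rpartition("@")
    port = new_port if new_port is not None else parts.port
    hostport = new_host if port is None else f"{new_host}:{port}"
    return urlunsplit((parts.scheme, f"{userinfo}{at}{hostport}",
                       parts.path, parts.query, parts.fragment))


old = "postgresql://app:secret@old-db:5432/orders?sslmode=require"
print(repoint_dsn(old, "new-db", 5433))
# postgresql://app:secret@new-db:5433/orders?sslmode=require
```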

The tangible benefits of compatibility

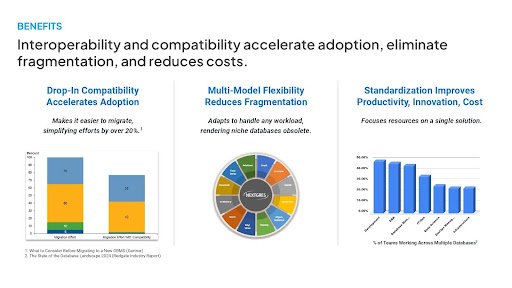

The benefits of interoperability and compatibility are substantial. A few years ago, Gartner conducted a study (with a more recent update in 2020) that highlighted the power of drop-in compatibility:

- Faster adoption and easier migrations: The study revealed that drop-in compatibility accelerates the adoption of new database systems and simplifies migration processes by over 20%. A significant portion of this improvement stems from reduced application rewrites and less extensive testing requirements.

- Cost savings: In the enterprise world, migration projects often cost millions of dollars, so a 20% reduction in migration costs through compatibility translates into significant savings. EnterpriseDB (EDB) frequently cites this study.

- Reduced data fragmentation: The trend towards multi-model databases, exemplified by Postgres with its extensive extensions, offers the potential to handle diverse workloads with a single system. This reduces the need for specialized databases, leading to less data fragmentation.

The ultimate advantage of API compatibility

The true power of API compatibility lies in its ability to make multi-model databases seamlessly compatible with existing specialized databases. This eliminates the need for application rewrites altogether. Organizations can reap the benefits of consolidation without the burden of application modifications.

In essence, API compatibility offers a powerful solution for streamlining application integration and simplifying database migrations, empowering organizations to leverage new technologies without sacrificing efficiency or incurring excessive costs.

The case for API compatibility: Streamlining workflows and reducing costs

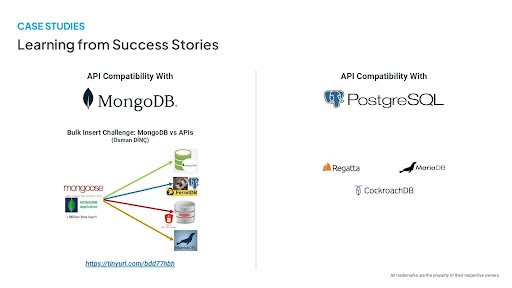

Let’s solidify the importance of API compatibility with a real-world example: FerretDB, a product that bridges the gap between Postgres and MongoDB by offering a MongoDB-compatible API on top of Postgres.
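To show roughly how a MongoDB-compatible layer on Postgres can work, here is a toy translator from a MongoDB query filter to a SQL WHERE clause over a JSONB column. This is not FerretDB’s implementation. The table layout (one jsonb column named `doc` per collection), the supported operators, and the function name are all assumptions for illustration. The sketch handles only string and numeric values and a few comparison operators, and it does not model MongoDB’s type-comparison rules.

```python
import re

SQL_OPERATORS = {"$eq": "=", "$gt": ">", "$gte": ">=", "$lt": "<", "$lte": "<="}
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def mongo_filter_to_sql(collection: str, flt: dict) -> tuple[str, list]:
    # Names are interpolated into SQL, so only plain identifiers are accepted.
    if not IDENTIFIER.fullmatch(collection):
        raise ValueError(f"unsafe collection name: {collection!r}")
    clauses, params = [], []
    for field, condition in flt.items():
        if not IDENTIFIER.fullmatch(field):
            raise ValueError(f"unsafe field name: {field!r}")
        # {"city": "Oslo"} is shorthand for {"city": {"$eq": "Oslo"}}.
        if not isinstance(condition, dict):
            condition = {"$eq": condition}
        for op, value in condition.items():
            if op not in SQL_OPERATORS:
                raise NotImplementedError(f"operator {op} is outside this sketch")
            if isinstance(value, bool) or not isinstance(value, (str, int, float)):
                raise NotImplementedError("only string and numeric values are handled")
            # ->> extracts the field as text; cast to numeric for number comparisons.
            column = f"doc->>'{field}'"
            if not isinstance(value, str):
                column = f"({column})::numeric"
            clauses.append(f"{column} {SQL_OPERATORS[op]} %s")
            params.append(value)
    where = " AND ".join(clauses) or "TRUE"
    return f"SELECT doc FROM {collection} WHERE {where}", params


sql, params = mongo_filter_to_sql("users", {"city": "Oslo", "age": {"$gt": 30}})
print(sql)
# SELECT doc FROM users WHERE doc->>'city' = %s AND (doc->>'age')::numeric > %s
print(params)  # ['Oslo', 30]
```

The `%s` placeholders match the parameter style used by psycopg, so the pair could be passed to a cursor’s `execute(sql, params)`. The application, meanwhile, keeps sending MongoDB filters and never sees the SQL.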

The Postgres and MongoDB overlap

- Widespread Postgres adoption: A significant number of companies (estimated to be over 124,000) utilize Postgres. Among these users, a large subset (around 80%) also leverages MongoDB.

- MongoDB workload overlap: For many companies, the workloads they run on MongoDB don’t actually require MongoDB’s performance capabilities and could be served by Postgres. This overlap leads to a lot of duplicated effort.

- The cost of duplicate databases: Managing and writing code for multiple databases is a real burden. Redgate’s industry report supports this, finding that half of all developers work across multiple databases, which hinders productivity.

The chain reaction of multiple databases

The ramifications of using multiple databases extend beyond developer efficiency:

- DBA burden: Database Administrators (DBAs) are responsible for managing these systems. Introducing a new database like MongoDB, potentially managed by developers unfamiliar with its administration, creates additional burdens for the existing DBA team.

- Increased costs: Companies often need to hire additional personnel to manage these disparate systems, leading to a chain reaction of increased costs and human resource overhead.

- Data fragmentation and governance: Multiple databases contribute to data fragmentation, further complicating data governance and incurring additional associated costs.

API compatibility: Preserving workflows and reducing overhead

API compatibility offers a solution to these challenges:

- Preserved workflows: Most companies have established workflows and procedures. API compatibility ensures these workflows continue to function seamlessly with compatible databases. Deploying a new system, especially an internal one, often requires significant DevOps effort to establish new management processes. Compatibility eliminates this need.

- Reduced DevOps costs: Managing and deploying compatible systems leverages existing workflows, minimizing the need for extensive DevOps work, particularly for newer or internal databases. This translates to significant cost savings.

Beyond app development: The operational impact

A crucial aspect often overlooked in discussions about database compatibility is the operational overhead:

- Focus on app development: Traditionally, discussions about database migrations or adoption center around application development. The operational overhead associated with managing these systems is often neglected.

- High operational costs: Managing multiple databases incurs significant ongoing costs. API compatibility helps minimize this burden by enabling existing workflows to function effectively with compatible databases.

Degrees of compatibility: Choosing the right approach

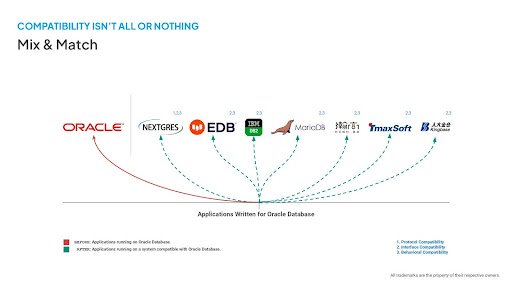

While API compatibility offers the most seamless experience, it’s important to remember that compatibility exists on a spectrum, not as an absolute requirement. Let’s delve deeper into this concept.

Levels on the compatibility spectrum

The various compatibility layers – protocol, interface, and behavioral – represent a spectrum, not a rigid hierarchy. Organizations can leverage different levels depending on their specific needs and priorities.

Success stories in partial compatibility

Several products demonstrate the effectiveness of achieving compatibility at different levels:

- Nextgres: Nextgres, co-founded by Jonah H. Harris, achieves success with a combination of protocol, interface, and behavioral compatibility. This allows users to migrate applications from Oracle with minimal disruption, because they can keep using their existing tools and drivers.

- EnterpriseDB (EDB): EDB, the market leader in this space, focuses on interface and behavioral compatibility. While this approach requires swapping out Oracle-specific tools and drivers, it has proven successful in facilitating application migration from Oracle.

Choosing the right fit for your needs

The optimal level of compatibility depends on the specifics of your situation:

- Considering your applications: The ideal approach hinges on the functionality and development style of your applications. No single solution applies universally.

- Tailored compatibility: Organizations can prioritize the types of compatibility that hold the most value for their unique requirements.

The value of protocol compatibility

For instance, if your primary objective is to capitalize on existing driver infrastructure when building a new system, then protocol compatibility alone might be sufficient. In this scenario, you would gain the benefit of leveraging established drivers without needing to invest resources in creating new ones from scratch.

Compatibility in action: Success stories and real-world examples

We’ve discussed the different levels of compatibility and their potential benefits. Now, let’s explore some real-world examples that showcase the power of compatibility in action.

The 1 billion row challenge: Highlighting compatibility

A recent blog post centered around the “1 Billion Row Challenge” provides a compelling illustration of compatibility’s value. This benchmark specifically compared MongoDB and various Mongo API-compatible systems.

Beyond MongoDB: A range of compatible options

The blog post made the compatibility angle concrete by walking through how the test was conducted:

- Baseline approach: The test was initially executed using the standard MongoDB approach.

- Exploring alternatives: The focus then shifted to Mongo-compatible systems, a diverse group with varying degrees of compatibility. Several options were tested, including Amazon DocumentDB and others.

- Code changes for compatibility: Notably, the blog post showcased the specific code modifications required (if any) for each compatible system to run the test successfully. This transparency is invaluable for developers.

In some cases, like FerretDB and MariaDB, no code changes were necessary. Even Oracle required only minor adjustments. This reinforces the key advantage of compatibility: writing an application once and minimizing the effort required to adapt it for use with different databases.
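The original 1 Billion Row Challenge computes the minimum, mean, and maximum temperature per weather station. Assuming the MongoDB version of the test does the same, that is a single `$group` aggregation. Because a pipeline is plain data, the same object can be handed unchanged to any backend that implements these stages, for example through pymongo’s `collection.aggregate(pipeline)`. The sketch pairs such a pipeline with a small pure-Python reference so that each backend’s output can be cross-checked. The field names `station` and `temperature` are assumptions, not taken from the blog post.

```python
from collections import defaultdict

# MongoDB aggregation pipeline: group by station, compute min/mean/max, sort by station.
PIPELINE = [
    {"$group": {
        "_id": "$station",
        "min": {"$min": "$temperature"},
        "mean": {"$avg": "$temperature"},
        "max": {"$max": "$temperature"},
    }},
    {"$sort": {"_id": 1}},
]


def reference_result(docs):
    """Compute what PIPELINE should return, for cross-checking each backend."""
    stats = defaultdict(lambda: [float("inf"), float("-inf"), 0.0, 0])  # min, max, sum, count
    for doc in docs:
        s = stats[doc["station"]]
        t = doc["temperature"]
        s[0] = min(s[0], t)
        s[1] = max(s[1], t)
        s[2] += t
        s[3] += 1
    return [
        {"_id": station, "min": mn, "mean": total / n, "max": mx}
        for station, (mn, mx, total, n) in sorted(stats.items())
    ]
```

When comparing a backend’s output to `reference_result`, allow a small tolerance on `mean`, since backends may sum floating-point values in a different order.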

Performance vs. rewrites: A critical consideration

The blog post effectively highlights the trade-off between application rewrites and performance implications. By showcasing the minimal code modifications needed for compatibility, it encourages developers to consider alternative databases without sacrificing significant development effort. We highly recommend checking out this insightful blog post.

Beyond MongoDB compatibility: The rise of Regatta

The discussion then expands beyond MongoDB compatibility to the broader landscape of Postgres-compatible databases. While numerous options exist, a recent one deserves attention: Regatta.

Regatta: Emerging from stealth mode

Regatta has been in stealth mode for a considerable period, generating industry buzz but remaining largely unseen. This emerging player highlights the ongoing development and innovation within the database compatibility space.

Regatta is a brand-new database designed as a one-stop solution for Online Transaction Processing (OLTP), Online Analytical Processing (OLAP), and Hybrid Transaction/Analytical Processing (HTAP). Interestingly, Regatta opted to leverage Postgres protocol and syntax for compatibility.

While their behavioral compatibility might not be flawless (some unique Postgres transaction behaviors might not be replicated perfectly), they gain significant advantages:

- Leveraging open source benefits: By adopting established standards, Regatta benefits from the vast amount of open-source Postgres development work accumulated over the past few decades. This translates to a more mature and feature-rich foundation upon which to build their innovative database solution.

Compatibility: A bridge for innovation

Regatta exemplifies how compatibility empowers new database solutions to integrate seamlessly into existing application ecosystems:

- Seamless integration: By adhering to Postgres protocols and syntax, Regatta becomes readily usable within existing application stacks that already rely on Postgres. This minimizes disruption and streamlines adoption for organizations.

A growing trend: Compatibility fuels innovation

Regatta isn’t alone in this approach:

- CockroachDB’s strategic move: CockroachDB, another innovative database solution, didn’t initially prioritize Postgres compatibility. However, they recognized the value of leveraging established functionalities offered by Postgres. While not a complete Postgres clone architecturally, they do emulate Postgres to a significant degree, enabling them to tap into the existing Postgres ecosystem.

- YugabyteDB: building on a strong foundation: YugabyteDB, a distributed database, builds on Postgres functionality and capabilities while rewriting the storage layer for a distributed architecture. This approach allows it to emulate the Postgres protocol, interface, and most behavioral aspects, with minor exceptions in specific distributed execution scenarios.

These examples demonstrate how forward-thinking database solutions are embracing compatibility standards to achieve faster adoption, smoother integration, and a quicker path to market success. They effectively bridge the gap between cutting-edge technology and the established database landscape.

Let’s shift gears to a specific compatibility project that Jonah H. Harris spearheaded at MariaDB: the Xgres project.

Xgres: Capitalizing on the growing Postgres market

MariaDB once offered a distributed SQL engine named Xpand, designed for MySQL compatibility. However, recognizing the decline of the MySQL market and the rise of Postgres, the strategic question arose: how to capture the burgeoning Postgres market?

Emulating Postgres for seamless integration

The Xgres project, similar to Yugabyte’s approach, aimed to replace the standard Postgres backend with a distributed database engine. This strategy yielded several advantages:

- Leveraging existing Postgres applications: Organizations could continue utilizing their existing Postgres applications without modification.

- Scalability made easy: When these applications reached the limitations of a single node, Xgres would offer a seamless transition to a distributed architecture for linear scalability. The burden of sharding and other complexities would be eliminated.

API compatibility with nuances

Like the other projects, Xgres pursued API compatibility with Postgres. However, it’s important to acknowledge the inherent challenges:

- Behavioral compatibility nuances: Certain Postgres behaviors, such as deferred constraint checking (constraints declared DEFERRABLE that are verified at commit rather than after each statement), are hard to emulate exactly in a distributed database. This shows that compatibility exists on a spectrum, with varying degrees of success in replicating specific functionality.
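To illustrate what deferred constraint checking means in practice, the sketch below uses Python's built-in `sqlite3` module purely as a convenient stand-in: SQLite also supports `DEFERRABLE INITIALLY DEFERRED` foreign keys, but it is not Postgres, and the `parent`/`child` tables are hypothetical. With a deferred constraint, a transaction may pass through a temporarily invalid state (a child row whose parent does not exist yet) as long as the state is valid at COMMIT. One plausible reason this is hard to replicate in a distributed engine is that such pending checks can involve rows on different nodes and must all be resolved atomically at commit time.

```python
import sqlite3


def try_child_first(deferred: bool) -> str:
    """Insert a child row before its parent inside one transaction.

    Returns "committed" on success, otherwise "rejected: <error>".
    """
    # isolation_level=None puts the connection in autocommit mode,
    # so the explicit BEGIN/COMMIT below control the transaction.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    timing = "DEFERRABLE INITIALLY DEFERRED" if deferred else ""
    conn.execute("CREATE TABLE parent (id INTEGER PRIMARY KEY)")
    conn.execute(
        "CREATE TABLE child (id INTEGER PRIMARY KEY, "
        f"parent_id INTEGER REFERENCES parent(id) {timing})"
    )
    try:
        conn.execute("BEGIN")
        conn.execute("INSERT INTO child VALUES (1, 10)")  # parent 10 does not exist yet
        conn.execute("INSERT INTO parent VALUES (10)")    # now it does
        conn.execute("COMMIT")                            # deferred checks run here
        return "committed"
    except sqlite3.IntegrityError as exc:
        conn.execute("ROLLBACK")  # the transaction is still open after the failure
        return f"rejected: {exc}"
    finally:
        conn.close()


print(try_child_first(deferred=True))   # checked at COMMIT, after the parent exists
print(try_child_first(deferred=False))  # checked immediately, so the first INSERT fails
```

An engine that claims behavioral compatibility with Postgres has to reproduce the first outcome for deferred constraints, not just reject or accept the same SQL syntax.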

The future: A landscape of increasing interoperability

As we look towards the horizon, several trends are evident:

- The rise of AI: The field of Artificial Intelligence is experiencing explosive growth and development.

- Evolving programming languages: New programming languages are constantly emerging and being refined.

While we won’t delve into each of these areas in detail, the overall direction is clear: interoperability and compatibility are becoming increasingly crucial.

Cloud vendors embrace compatibility

Cloud vendors are actively investing in managed database services, many of which are built on open-source databases. To ensure these services remain valuable, however, they recognize the critical need for compatibility:

- Open source with added value: Cloud vendors provide additional functionalities and advantages on top of the open-source foundation.

- Maintaining application compatibility: To be truly useful, these cloud-based services must remain compatible with the applications organizations have already developed. For this reason, cloud vendors prioritize, at minimum, backward compatibility with established APIs.

Conclusion

This article explored database compatibility, showcasing its importance. Compatibility isn’t all-or-nothing; organizations can choose the level (protocol, interface, or behavioral) that best suits their needs. Real-world examples like the 1 Billion Row Challenge and innovative solutions like Regatta highlight the benefits. As AI, new languages, and cloud services emerge, interoperability will be paramount. Cloud vendors prioritize compatibility for seamless integration. By embracing compatibility, organizations can navigate the evolving database landscape with agility and unlock the full potential of their data.

Questions & Answers:

Q1: Are there legal risks with building API-compatible databases?

Yes, potential legal issues exist. The Oracle vs. Google case highlighted conflicting legal opinions on API compatibility. However, open-sourcing the API code weakens claims of proprietary ownership. A lawsuit challenging an open-source, compatible implementation would be a significant test case.

Q2: Do established database vendors benefit from API compatibility?

Not necessarily. Established vendors may not welcome compatibility, because they see their APIs as a competitive advantage (lock-in). However, if their core functionality isn’t truly differentiated, compatibility can force them to innovate and offer value beyond the API.

Example: A large bank wanted a database that could handle both relational and key-value storage, reducing complexity. Compatibility allows new vendors to offer such capabilities, challenging established single-purpose solutions.

Vendor frustration: Compatibility is most frustrating for established vendors whose core functionality is replaceable, because it undercuts the lock-in strategy they rely on.

Q3: Does API compatibility empower users against vendor lock-in?

Yes, compatibility fosters user choice and weakens vendor lock-in. Users can leverage alternatives if a vendor becomes aggressive.

Example: Companies might be hesitant to sign with Oracle due to lock-in concerns. Compatibility with alternatives like Postgres offers them an exit strategy.

Beyond lock-in avoidance: Compatibility offers other benefits:

- Upward migration path: Compatibility allows users to start with an open-source solution (e.g., Postgres) and seamlessly migrate to a more powerful option (e.g., Oracle) if their needs grow.

- Reduced rewriting: Users can build applications on one database (e.g., Oracle) and later migrate to a more affordable, compatible option (e.g., Postgres) without significant code changes.

Benefits on both sides: Compatibility gives users flexibility and gives vendors opportunities to innovate.

Q4: Can compatibility benefit established database vendors?

Yes, compatibility can create a win-win situation. Here’s how:

- De facto standards: Widespread compatibility can elevate a vendor’s technology to a de facto standard. This strengthens its brand recognition and industry influence (e.g., MongoDB and its API).

- Increased adoption: Compatibility attracts new users who might be hesitant to adopt a proprietary solution due to lock-in concerns. This expands the overall market for the technology.

Example: Imagine being Oracle. Compatibility with your technology encourages broader adoption, even if some users choose alternatives. This expands the overall market for document databases, a space where Oracle might also offer competitive solutions.

Branding benefits: Compatibility acts as a powerful branding tool. When other vendors tout “MongoDB-compatible” solutions, it reinforces the recognition and success of MongoDB itself.

Q5: How do compatible solutions handle situations where the original vendor deprecates functionality?

This can be a challenge for compatible solutions:

- User reliance on deprecated features: Users might still require features even if the original vendor deprecates them.

Open source advantage (moderator’s view):

- Focus on user needs: Open-source solutions prioritize user needs and might continue supporting deprecated features, offering an advantage over the original vendor.

Challenges of compatibility:

- Maintaining compatibility: Supporting deprecated features complicates maintaining compatibility with the evolving original system.

- Bug compatibility: In some cases, replicating the specific behavior of bugs might even be necessary to ensure user applications function as expected (humorously termed “bug compatibility”).
